Let me start this article off on the right foot: dashboards are not dead. It may feel as though they are losing importance as AI becomes part of the toolkit we use to understand our data. But that’s a good thing! Let me explain why.

Why Use Dashboards At All?

When I started my career, I worked as a data analyst, and I loved it. I loved using SQL to transform data, which mostly ended up in an Excel report, a Tableau dashboard, or IBM Cognos.

Most of the information I transformed was presented in Tableau dashboards. As I became more experienced, my dashboards became more intricate. Before I left that job, I built an overly complicated, feature-packed dashboard for the executive team. Every page was chock-full of filters, with so many combinations that there was no way anyone would ever see every view of the data. We’re talking millions of possibilities.

Despite all of these filters, there was always a need for another filter or for some information that wasn’t there. Eventually, people were talking about needing a tool just to go through all of the options and select the best one.

I describe this situation because it was not the right use case for a dashboard. I had fallen prey to the rookie mistake of trying to appease everyone, packing in so much information that in the end the dashboard appeased no one.

According to my colleagues, my most successful dashboard since leaving that job has been the clearest and most concise one: built for a specific purpose, with actionable insights. It was less complicated and had fewer filters, but it was exactly what the team needed.

So why build dashboards at all? Because they are absolutely the right solution given the right context.

Building things that nobody wants is terrible. I’ve experienced it, and it’s a tough lesson to learn, but in my opinion it is a fundamental rite of passage for analysts. We need to learn what not to build so we can build what matters most.

The Democratization of Data

There are many ways to interact with information, and the most democratized is the humble spreadsheet. Excel revolutionized data analytics by giving every user the power to transform data into what they need. In just 11 years, from 1985 to 1996, Excel gained 30 million users¹. In a Microsoft post from that time, KPMG explained why it used Excel:

“Microsoft Excel is now the spreadsheet of choice for our business. It is an analytical engine that is both powerful and easy to use, and it has become a vital tool for us in productive client service delivery.”

I feel that, for AI and data analytics, we are at a moment similar to the advent of Excel and computer spreadsheets. Yes, AI is still improving. Yes, AI hallucinates at times. And yes, AI can definitely be used for data analytics. Using AI for data analytics can absolutely be the right solution given the right context (and yes, pun intended).

My Experience Building Reliable SQL ETL Pipelines

Before I left my job as a data analyst, I interviewed at the same company for a data scientist position. In the interview, I was supposed to use linear regression to solve a problem. I was so scarred by SQL missteps from my analyst days that I asked multiple times, “Can we assume this data has no duplicates, though?” The exasperated interviewers said, “Forget about the duplicates, there are no duplicates in these datasets.” Needless to say, I didn’t get the data scientist job there.

I kept interviewing and soon started working at a startup as a data scientist. What I loved about this startup was its approach to data transformation. Its ETL pipelines used Python + Jinja + SQL through homegrown ‘dbt-like’ software that made everything much more programmatic. I was also happy to go from folders of unversioned SQL scripts to the warm embrace of versioned code in GitHub (to my former employer’s credit, they were trying to get analysts to use Bitbucket when I left).

What I really appreciated was that the startup had a programmatic solution for duplicates! Instead of checking my code over and over to make sure no duplicates had snuck in, I could rely on a stored procedure that ran when tables were created and checked whether the output satisfied a primary key defined via regex. It also provided other conveniences, such as null checks and completeness checks. Features like these are essential for building robust ETL pipelines.
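To make this concrete, here is a minimal sketch of those three checks in Python against SQLite. It is not the startup’s stored procedure: the `-- primary_key:` header comment, the regex, and the helper names `parse_primary_key` and `check_table` are hypothetical, and the completeness check is simplified to a minimum row count.

```python
import re
import sqlite3

# Hypothetical convention: each model file declares its key in a header comment,
# e.g. "-- primary_key: order_id, line_no", which a regex pulls out.
PK_PATTERN = re.compile(r"^--[ \t]*primary_key:[ \t]*(.+)$", re.MULTILINE)


def parse_primary_key(sql_text):
    """Return the primary key columns declared in a model's SQL text."""
    match = PK_PATTERN.search(sql_text)
    if match is None:
        raise ValueError("no primary_key declaration found")
    return [col.strip() for col in match.group(1).split(",") if col.strip()]


def check_table(conn, table, pk_cols, not_null_cols, min_rows=1):
    """Run primary key, null, and completeness checks; return a list of failures.

    Table and column names are interpolated directly, so they must come from
    trusted pipeline config, never from user input.
    """
    failures = []
    key = ", ".join(pk_cols)

    # Primary key check: every combination of key columns must be unique.
    dupes = conn.execute(
        f"SELECT {key} FROM {table} GROUP BY {key} HAVING COUNT(*) > 1"
    ).fetchall()
    if dupes:
        failures.append(f"{table}: {len(dupes)} duplicated key(s) on ({key})")

    # Null checks on columns that must always be populated.
    for col in not_null_cols:
        (n_null,) = conn.execute(
            f"SELECT COUNT(*) FROM {table} WHERE {col} IS NULL"
        ).fetchone()
        if n_null:
            failures.append(f"{table}.{col}: {n_null} null value(s)")

    # Completeness check, simplified here to a minimum row count.
    (n_rows,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    if n_rows < min_rows:
        failures.append(f"{table}: {n_rows} row(s), expected at least {min_rows}")

    return failures


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    model_sql = """-- primary_key: order_id, line_no
CREATE TABLE order_lines AS
SELECT 1 AS order_id, 1 AS line_no, 9.99 AS amount
UNION ALL SELECT 1, 2, 5.00
UNION ALL SELECT 1, 2, 5.00
UNION ALL SELECT 2, 1, NULL;
"""
    conn.executescript(model_sql)
    for failure in check_table(conn, "order_lines", parse_primary_key(model_sql), ["amount"]):
        print(failure)
```

On the sample model, the sketch reports one duplicated key on `(order_id, line_no)` and one null `amount`, which is exactly the kind of problem that otherwise has to be hunted down by hand.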

This One Goes Out to the AI Skeptics

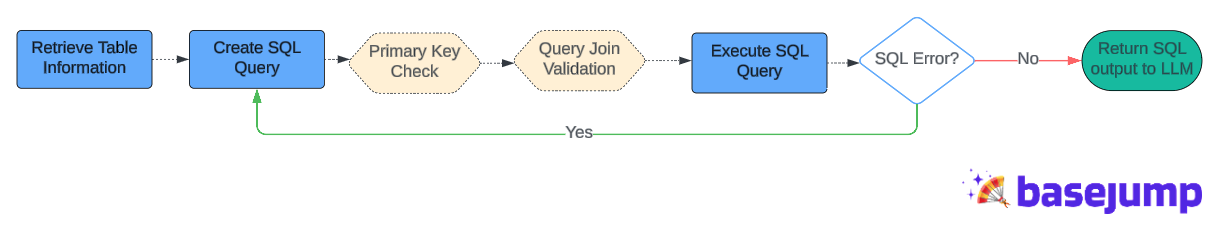

When building solutions on LLM workflows, it’s important to understand LLM limitations and how to overcome them. LLMs tend to hallucinate, so you need retrieval-augmented generation (RAG) to ground the LLM’s output. For our solution at Basejump AI, we use agentic RAG: our AI runs in a loop to find the correct SQL query for your particular question. This SQL can be validated before it is returned. Just as typical ETL workflows use primary key checks, the AI’s output can go through the same checks, along with checks that the joins between tables are done correctly.
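As an illustration of what checks like these can look like, here is a minimal sketch in Python with SQLite. It is not Basejump AI’s implementation; `validate_generated_sql` and its `grain_cols` parameter (the column or columns that should identify one row of the answer) are hypothetical. It rejects anything other than a single SELECT, uses `EXPLAIN` to confirm the query compiles against the real schema, and reuses the primary key idea from ETL to catch joins that fan out rows.

```python
import sqlite3
from collections import Counter


def validate_generated_sql(conn, sql, grain_cols):
    """Pre-flight checks for LLM-generated SQL; returns a list of problems."""
    statement = sql.strip().rstrip(";").strip()

    # 1. Read-only guard: accept exactly one plain SELECT statement.
    #    (Naive: a ';' inside a string literal is also rejected. A real
    #    deployment would also connect with a read-only database role.)
    if ";" in statement or not statement.lower().startswith("select"):
        return ["only a single SELECT statement is allowed"]

    # 2. Compile check: EXPLAIN makes SQLite prepare the statement, which
    #    fails on syntax errors and on unknown tables or columns.
    try:
        conn.execute("EXPLAIN " + statement).fetchall()
    except sqlite3.Error as exc:
        return [f"query does not compile: {exc}"]

    # 3. Grain check: the answer should have one row per grain key. A join
    #    that fans out rows shows up as duplicated keys, the same way a
    #    primary key check catches duplicates in an ETL table.
    cursor = conn.execute(statement)
    columns = [desc[0] for desc in cursor.description]
    missing = [col for col in grain_cols if col not in columns]
    if missing:
        return [f"result is missing grain column(s): {missing}"]
    positions = [columns.index(col) for col in grain_cols]
    counts = Counter(tuple(row[i] for i in positions) for row in cursor.fetchall())
    n_dupes = sum(1 for n in counts.values() if n > 1)
    if n_dupes:
        return [f"{n_dupes} grain key(s) appear more than once; check the joins"]
    return []


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Lin');
        INSERT INTO orders VALUES (1, 1, 10.0), (2, 1, 15.0), (3, 2, 7.0);
    """)
    # Question: "What is the total order amount per customer?"
    candidates = [
        # Forgot to aggregate: customer 1 appears once per order.
        "SELECT c.id AS customer_id, o.amount FROM customers c "
        "JOIN orders o ON o.customer_id = c.id",
        "SELECT c.id AS customer_id, SUM(o.amount) AS total FROM customers c "
        "JOIN orders o ON o.customer_id = c.id GROUP BY c.id",
        "SELECT * FROM invoices",  # hallucinated table
        "DELETE FROM orders",
    ]
    for sql in candidates:
        print(validate_generated_sql(conn, sql, ["customer_id"]) or "OK")
```

Here the first candidate is flagged because customer 1 has two orders and the query never aggregates, the second passes, the third fails to compile, and the `DELETE` is rejected outright.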

LLMs have advanced quickly on SQL benchmarks such as Spider, going from 53% to over 90% in just 3 years². The group that created Spider recently released a more challenging benchmark, Spider 2.0³, to account for this improvement. Other benchmarks, such as BIRD⁴, evaluate not only whether the LLM gets the correct answer but also whether it gets there efficiently. BIRD also introduces messier data that the LLM has to handle to produce an accurate response.
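One common way these benchmarks score a model is execution accuracy: run the generated query and a reference query against the same database and compare the results, so differently written SQL that returns the same answer still counts as correct. The sketch below, in Python with SQLite, shows only that core idea; the function names are hypothetical, and the benchmarks’ official evaluation scripts (including BIRD’s efficiency scoring) are more involved.

```python
import sqlite3
from collections import Counter


def execution_match(conn, predicted_sql, gold_sql, ordered=False):
    """True if the predicted query returns the same rows as the reference query."""
    try:
        predicted = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # a query that errors out counts as wrong
    gold = conn.execute(gold_sql).fetchall()
    if ordered:
        return predicted == gold
    # Without ORDER BY, row order is not meaningful: compare as multisets.
    return Counter(predicted) == Counter(gold)


def execution_accuracy(conn, examples):
    """Fraction of (predicted, gold) pairs whose results match."""
    return sum(execution_match(conn, p, g) for p, g in examples) / len(examples)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE employees (name TEXT NOT NULL, dept TEXT, salary REAL);
        INSERT INTO employees VALUES
            ('Ada', 'eng', 120.0), ('Lin', 'eng', 110.0), ('Sam', 'ops', 90.0);
    """)
    examples = [
        # Different SQL, same result: counts as correct.
        ("SELECT dept, COUNT(*) FROM employees GROUP BY dept",
         "SELECT dept, COUNT(name) FROM employees GROUP BY dept"),
        # Wrong filter: returns a different set of rows.
        ("SELECT name FROM employees WHERE salary > 100",
         "SELECT name FROM employees WHERE salary >= 90"),
    ]
    print(execution_accuracy(conn, examples))  # 0.5
```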

All of this is to say that LLMs are advancing rapidly, but despite that progress, their capabilities still have plenty of limits. That is why an accurate, trusted enterprise RAG implementation needs the following:

- SQL query checks

- Human-in-the-loop iteration with the ability to self-verify

- Verification of outputs by people with SQL knowledge

- A strong semantic/metadata layer to provide context to the LLM
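For the last item, a semantic layer can start as simply as rendering the schema, keys, join paths, and human-written definitions into text the LLM sees alongside the question. Here is a minimal sketch assuming SQLite; `describe_schema` and the shape of the `descriptions` dictionary are hypothetical, and a real semantic layer would carry far more business context.

```python
import sqlite3


def describe_schema(conn, descriptions):
    """Render tables, columns, keys, and join paths as plain text for a prompt.

    `descriptions` maps "table" or "table.column" to a business definition.
    """
    lines = []
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    )]
    for table in tables:
        lines.append(f"Table {table}: {descriptions.get(table, 'no description')}")
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        for _cid, col, col_type, _notnull, _default, pk in conn.execute(
            f"PRAGMA table_info({table})"
        ):
            key = " [primary key]" if pk else ""
            note = descriptions.get(f"{table}.{col}")
            lines.append(f"  - {col} {col_type}{key}" + (f": {note}" if note else ""))
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, ...)
        for fk in conn.execute(f"PRAGMA foreign_key_list({table})"):
            lines.append(f"  join: {table}.{fk[3]} = {fk[2]}.{fk[4]}")
    return "\n".join(lines)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            amount REAL
        );
    """)
    print(describe_schema(conn, {
        "orders": "One row per order.",
        "orders.amount": "Order total, including tax.",
    }))
```

Spelling out the join path (`orders.customer_id = customers.id`) gives the model up front the same information that join checks can only validate after the fact.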

Where Do We Go From Here?

I am convinced that, as with Excel, we will see rapid adoption of LLMs for analytical tasks because they democratize data access, most notably by making the database accessible to non-coders.

The same KPMG quote that explained Excel’s rise in popularity will likely be used to explain why LLMs are an essential tool for meeting analytical needs:

“It is an analytical engine that is both powerful and easy to use, and it has become a vital tool for us in productive client service delivery.”
